BigQuery Connections

BigQuery is a fully managed, serverless data warehouse hosted in Google Cloud Platform. BigQuery can automatically scale to read, write, and transform petabytes of data. This section describes how to interact with BigQuery through a connection in Dataprep by Trifacta.

For more information on BigQuery, see https://cloud.google.com/bigquery.

Enable

A project owner does not need to enable access to BigQuery. Access to BigQuery is governed by permissions.

IAM role

An IAM role is used by the product to enable access for it to Google Cloud resources. The default IAM role that is assigned to each user in the project is granted access to read and write data in BigQuery.

If you are using a custom IAM role to access Google Cloud resources, you must ensure that the role contains the appropriate permissions to read and write data in BigQuery.

For more information, see Required Dataprep User Permissions.

Service account

A service account is used by the product to run jobs in Dataflow. The default service account that is assigned to each user in the project is granted access to BigQuery.

If you are using a custom service account, you must ensure that the account contains the appropriate permissions to read and write data in BigQuery.

For more information, see Google Service Account Management.

Limitations

Note

The platform supports a single, global connection to BigQuery. All users must use this connection.

Create BigQuery Connection

You do not need to create a connection to BigQuery. It is accessible based on permissions. See above.

Create via API

You cannot create BigQuery connections through the APIs.

Using BigQuery Connections

Note

JSON data stored in BigQuery is not supported for ingest or publication.

Uses of BigQuery

Dataprep by Trifacta can use BigQuery for the following tasks:

Create datasets by reading from BigQuery tables.

Write data to BigQuery.

Note

If you are reading data from BigQuery and writing job results for it back to BigQuery, both locations must be in the same geographic region.

Before you begin using BigQuery

Your BigQuery administrator must enable BigQuery for your Dataprep by Trifacta project.

Your BigQuery administrator should provide datasets or locations and access for storing datasets within BigQuery.

Users should know where shared data is located and where personal data can be saved without interfering with or confusing other users.

Note

Dataprep by Trifacta does not modify source data in BigQuery. Datasets sourced from BigQuery are read without modification from their source locations.

For more information on how data types are converted to and from BigQuery sources, see BigQuery Data Type Conversions.

Support for CMEK

Use of Customer Managed Encryption Keys (CMEK) is supported and is transparent to the user. For more information, see https://cloud.google.com/kms/docs/cmek.

Reading from tables in BigQuery

You can create a dataset from a table stored in BigQuery.

Standard SQL

Tables

Views

Note

Standard SQL syntax is supported. Legacy SQL syntax is not supported.

Nested tables are supported.

Partitioned tables are supported, but these must include a schema.

Note

Reading from external tables or from tables without a schema is not supported.

Note

Dataprep by Trifacta only supports native BigQuery tables and views. Schema information is required. Dataprep by Trifacta does not support BigQuery sources that reference data stored in Google Suite.

Creating datasets with custom SQL

Note

This feature may not be available in all product editions. For more information on available features, see Compare Editions.

You can create your datasets by generating custom SQL SELECT statements on your BigQuery tables.

Tip

By pre-filtering your datasets using custom SQL, you can reduce the volume of data that is transferred from the database, which can significantly improve import performance.

For more information, see Create Dataset with SQL

Reading from other projects

If you have access to multiple projects:

Reading: you can browse for BigQuery tables in other projects from which to read.

Writing: you can browse for BigQuery databases that are accessible from other projects to which you have read and write access.

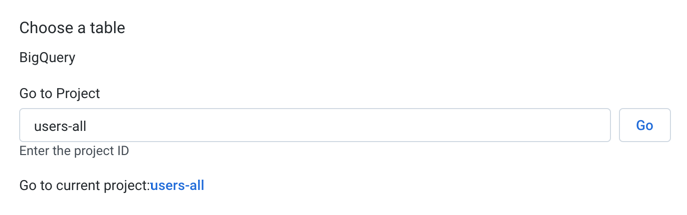

Figure: Enter a project identifier to browse for BigQuery databases and tables

Enter the project identifier in the textbox, and click Go.

Tip

The identifiers for your projects are available from the Projects menu in the toolbar. See Projects Menu.

Tip

You can paste project identifiers from publicly available projects to read from them.

Writing to BigQuery

You can write datasets to BigQuery as part of your job definition.

Note

In some environments, users may not be permitted the bigquery.datasets.create permission, and Dataflow jobs on BigQuery sources fail. As a workaround, an administrator can define a BigQuery dataset for use for these jobs. This dataset is used by Dataprep by Trifacta for storing temporary tables of intermediate query results when running Dataflow jobs on BigQuery sources, which eliminates the need for the bigquery.datasets.create permission in the service account. For more information on defining this BigQuery query dataset, see Dataprep Project Settings Page.

Note

Object data types are written back to BigQuery as string values. Some Array data types are written as arrays. For more information, see BigQuery Data Type Conversions.

Note

BigQuery does not support destinations with a dot (.) in the name. For more information, see https://cloud.google.com/bigquery/docs/tables#table_naming.

Tip

Optionally, you can choose to merge your results to an existing BigQuery table. Based on a specified set of matching columns, you can define the rows to update from your source.

Note

By default, data is published to BigQuery using strict data type matching rules. When there are mismatches between Alteryx data types and BigQuery data types, the publication fails. This setting is defined on a per-output basis. For more information, see BigQuery Table Settings.

Tip

During import from Cloud Storage, you can choose to show hidden files. These files include profiler files generated during a job run. These files can be re-imported into the product and then published to BigQuery for additional analysis. Please note that BigQuery does not support names with a dot in them. For more information, see Import Data Page.

For more information on creating a publishing action to BigQuery, see BigQuery Table Settings.

Writing to other projects

If you have write access to other projects, you can write to BigQuery tables that are associated with those projects. You must have write access to any table to which you are writing.

You can specify the target table as part of the job specification. See Run Job Page.

SQL Syntax

The following syntax requirements apply to this connection.

Object delimiters:

Back-ticks around datasets (database/table combination)

No quotes around column/field references

Double-quotes can be used around mock data in a SELECT statement

Example syntax:

SELECT column1,column2 FROM `databaseName.tableName`

For more information, see https://cloud.google.com/bigquery/docs/reference/standard-sql/query-syntax.

For more information on SQL in general, see Supported SQL Syntax.

Using BigQuery as a running environment

If your source data and output targets are located in BigQuery, you may be able to execute your recipe transformations inside BigQuery. For more information, see BigQuery Running Environment.

BigQuery Data Type Conversions

For more information, see BigQuery Data Type Conversions.

Reference

Enable: Automatically enabled.

Create New Connection: n/a