View for Outputs

Each recipe has one or more associated outputs. You can configure these publishing destinations through the context panel in Flow View. Through outputs, you can execute and track jobs for the related recipe.

Figure: Output icon

In the context panel, the following options are available:

Run: Click Run to queue a job for immediate execution against the manual destinations. You can track the progress and results of this job through the Jobs tab.

In the context menu:

Delete Output: Remove this output from the flow. This operation cannot be undone. Removing an output does not remove the jobs associated with the output. You can continue working with those executed jobs. See Job History Page.

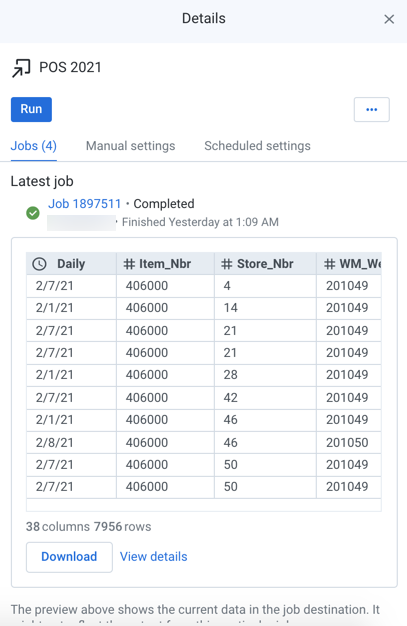

Jobs tab

Figure: Jobs tab

Each entry in the Jobs tab identifies a job that has been Queued, Completed, or In Progress for the selected output. You can track the progress, success, or failure of execution. If you have executed no jobs yet, the Jobs tab is empty.

For the latest job:

You can preview the job results. Click the Preview pane to open the results in a separate window.

Note

The Preview pane reflects the state of the data at the location specified for the output. If other jobs are also writing to this location, the state of the data may not reflect the output for this specific job.
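The behavior described in this note can be illustrated with a minimal sketch. It is not product code: `run_job`, `preview`, and the file name are hypothetical stand-ins, and the sketch assumes that each job overwrites the output location, which may not match every publishing action.

```python
import tempfile
from pathlib import Path


def run_job(job_name: str, output_path: Path) -> None:
    """Hypothetical stand-in for a job run: overwrite the output location."""
    output_path.write_text(f"results written by {job_name}\n")


def preview(output_path: Path) -> str:
    """Hypothetical stand-in for the Preview pane: read what is at the location now."""
    return output_path.read_text()


def demo() -> str:
    with tempfile.TemporaryDirectory() as tmp:
        shared = Path(tmp) / "output.csv"  # hypothetical shared output location
        run_job("job-1", shared)
        run_job("job-2", shared)  # a second job writes to the same location
        # The preview reads the location, not a per-job snapshot, so a preview
        # opened for job-1 would show the data written by job-2.
        return preview(shared)
```

In this sketch, the preview returns the data from the most recent writer, regardless of which job you selected.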

Note

This section is not displayed if the job fails. The Preview may not be available if errors occur.

To download the results from the output location, click the Download button.

Note

This button may not be available for some successful jobs.

To view job details, click View details. For more information, see Job Details Page.

You can also view the previous jobs that have been executed for the selected output.

Tip

When you hover the mouse over a job, you can review details of the job in progress. For more information, see Overview of Job Monitoring.

When a job has finished execution, click the link to the job to view results.

Actions:

For a job, you can do the following:

Click the job link to view the results of your completed job. For more information, see Job Details Page.

Cancel job: Select to cancel a job that is currently being executed.

Note

If you do not have permission to cancel a Dataflow job, the appropriate permissions must be added to your IAM role by an administrator. The default IAM role available with the product has these permissions, but these permissions may not be present if you are using a custom or personal IAM role in your account. For more information on IAM permissions, see Required Dataprep User Permissions.

Note

In some cases, the product is unable to cancel the job from the application. In these cases, click View in Dataflow, and from there you can cancel the job in progress.

View Dataflow Job: View the job on Dataflow. If the job failed, you can review error information through Dataflow.

View on BigQuery: This option is available if you executed the job on BigQuery.

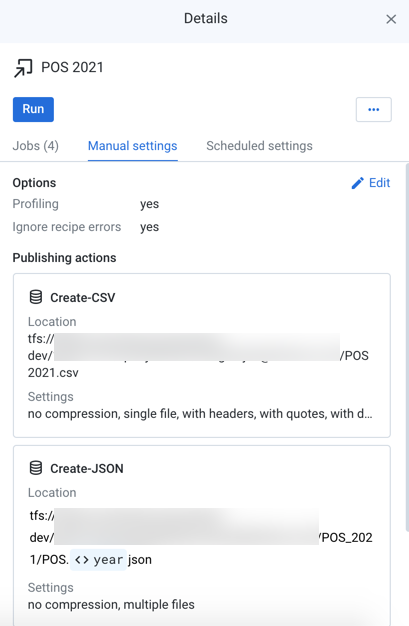

Manual Settings tab

The Manual Settings tab contains configured outputs for manual execution of jobs through the application interface.

Figure: Manual Settings tab

Options:

Environment: The running environment where the job is configured to be executed.

Profiling: If profiling is enabled for this destination, this value is set to yes.

Ignore recipe errors: When enabled, non-fatal errors encountered in a recipe during job execution are ignored. These errors are available for review in the Job Details page.

To create a new manual destination, click Add.

To edit the current manual setting, click Edit.

For more information on these settings, see Run Job Page.

Publishing actions:

For the manual destination, this section outlines any additional publishing actions to be taken when generating the output.

Location: Full path to the target location.

If output parameters have been created for the destination, you can review their names in the path. For more information, see Overview of Parameterization.

SQL scripts:

Note

The SQL Scripts feature may need to be enabled in your environment by an administrator.

Before or after a job, you can specify one or more SQL scripts to execute. These scripts can be used for tasks such as staging data for job execution or updating an audit table after job execution. For more information, see SQL Scripts Panel.
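As a rough illustration of the pre-job and post-job pattern, the following sketch uses Python's built-in `sqlite3` module. The table names (`staging_orders`, `job_audit`), the script contents, and the `run_with_sql_scripts` helper are all hypothetical. In the product, the scripts are defined in the SQL Scripts panel and run against your connected database, not through Python.

```python
import sqlite3

# Hypothetical pre-job script: prepare a staging table before the job runs.
PRE_JOB_SQL = """
CREATE TABLE IF NOT EXISTS staging_orders (id INTEGER, amount REAL);
DELETE FROM staging_orders;
"""

# Hypothetical post-job script: record the run in an audit table.
POST_JOB_SQL = """
CREATE TABLE IF NOT EXISTS job_audit (job_name TEXT, rows_written INTEGER);
INSERT INTO job_audit (job_name, rows_written)
SELECT 'orders_output', COUNT(*) FROM staging_orders;
"""


def run_with_sql_scripts(conn: sqlite3.Connection) -> list:
    """Run the pre-job script, a stand-in job, then the post-job script."""
    conn.executescript(PRE_JOB_SQL)
    # Stand-in for the job itself: write two rows to the staged table.
    conn.executemany(
        "INSERT INTO staging_orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)]
    )
    conn.commit()
    conn.executescript(POST_JOB_SQL)
    return conn.execute("SELECT job_name, rows_written FROM job_audit").fetchall()
```

Calling `run_with_sql_scripts(sqlite3.connect(":memory:"))` returns the audit rows, which gives a quick way to check that the post-job script saw the data written by the job.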

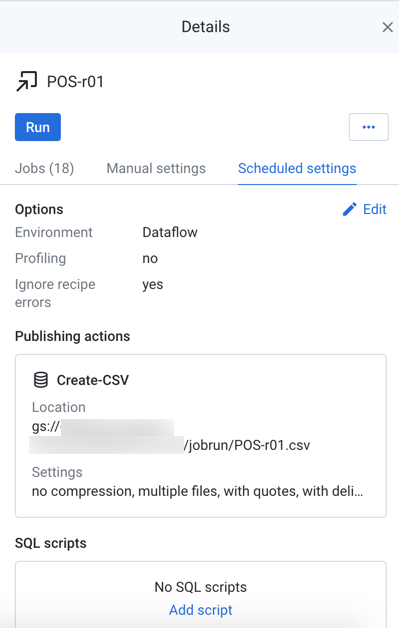

Scheduled Settings tab

If a schedule has been defined for the flow, you can define a separate set of destinations, which are populated whenever the schedule is triggered and the associated recipe is successfully executed. If any input datasets are missing, the job is not run.
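The scheduled behavior described above can be summarized as a small decision sketch. The function names, the callbacks, and the returned status strings are hypothetical and exist only to show the order of checks. The product's actual scheduler is not exposed this way.

```python
from pathlib import Path
from typing import Callable, Iterable, List


def missing_inputs(input_paths: Iterable[Path]) -> List[Path]:
    """Return the input datasets that cannot be found."""
    return [p for p in input_paths if not p.exists()]


def on_schedule_trigger(
    input_paths: Iterable[Path],
    run_recipe: Callable[[], bool],
    publish: Callable[[], None],
) -> str:
    """Hypothetical model of one scheduled run."""
    # If any input dataset is missing, the job is not run at all.
    if missing_inputs(input_paths):
        return "skipped"
    # Scheduled destinations are populated only after the recipe succeeds.
    if not run_recipe():
        return "failed"
    publish()
    return "published"
```

The `publish` callback is invoked only on the last path, which mirrors the rule that scheduled destinations are populated only when the schedule fires and the recipe executes successfully.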

Note

The Scheduling feature may need to be enabled in your environment by an administrator.

Figure: Scheduled Settings tab

Note

Flow collaborators cannot modify publishing destinations.

See Add Schedule Dialog.

For more information, see Overview of Scheduling.