Import Flow

An exported flow can be imported into Dataprep by Trifacta.

Limitations

Note

You cannot import flows that were exported before Release 6.8.

Note

You cannot import flows into a version of the product that is earlier than the one from which you exported them. For example, if you develop a flow on free Designer Cloud Powered by Trifacta Educational, which is updated frequently, you may not be able to import it into other editions of the product, which are updated less frequently.
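The two version rules above can be expressed as a small pre-check. The following is only a sketch: it assumes that release identifiers are dotted numeric strings such as "6.8", and the function names are hypothetical helpers, not part of the product. Some editions may not expose a release number in this form.

```python
# Oldest export release that can still be imported, per the note above.
MIN_EXPORT_RELEASE = (6, 8, 0)


def parse_release(text):
    """Turn a dotted release string such as '6.8' into a 3-part tuple."""
    parts = [int(part) for part in text.split(".")]
    # Pad to three components so '6.8' and '6.8.0' compare as equal.
    parts += [0] * (3 - len(parts))
    return tuple(parts[:3])


def can_import(exported_release, target_release):
    """Return True if neither version limitation rules out the import."""
    exported = parse_release(exported_release)
    target = parse_release(target_release)
    if exported < MIN_EXPORT_RELEASE:
        # Flows exported before Release 6.8 cannot be imported.
        return False
    # The target must not be earlier than the release that exported the flow.
    return target >= exported
```

For example, `can_import("7.6", "7.1")` returns `False` because the target release is earlier than the exporting release.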

Imported flows do not contain the following objects:

Note

Depending on the import environment, some objects in the flow definition may be incompatible. For example, the connection type may not be valid, or a datasource may not be reachable. In these cases, the objects may be removed from the flow, or you may have to fix a reference in the object definition. After import, you should review the objects in the flow to verify.

Reference datasets

Note

Reference dataset objects may refer to connections and parameters that are not specifically part of the flow being imported. These references must be remapped in the new project or workspace.

Samples

Connections

Tip

When you import an exported flow, you can perform a remapping of the connections listed in the flow to connections in the new project or workspace. Details are below.

Imported datasets that are ingested into backend storage for Dataprep by Trifacta may be broken after the flow has been imported into another instance. These datasets must be reconnected to their source. You cannot use import mapping rules to reconnect these data sources. This issue applies to the following data sources:

Microsoft Excel workbooks and worksheets. See Import Excel Data.

Google Sheets. See Import Google Sheets Data.

PDF tables. See Import PDF Data.

Import into a new Google Cloud Platform project

When a flow is imported from one Google Cloud Platform (GCP) project into another GCP project, the underlying data must be accessible through the imported flow in the new GCP project. This means:

Source data must be accessible to all users who have access to the imported flow. Please see Cross-Project Data Access for more details.

If the flow is shared with other users of the project, they must have access to the underlying data.

Samples must be re-created, since they are not included in the import.

Note

If the above requirements are not met for flows imported into a different project, users may experience 403 Access Forbidden errors when attempting to connect to the flow or its underlying assets.

Note

If you import a flow from Premium Edition into Standard Edition, you may encounter soft validation errors during job execution. If the imported flow uses custom VPC mode, then the job execution may fail, since the network may be inaccessible. The workaround is to do one of the following before you run your job: 1) set the VPC mode to Auto, or 2) set accessible VPC options. See Runtime Dataflow Execution Settings.

Note

If your imported flow contains an output object where dataflow execution overrides have been specified, then the overrides are applied to any jobs executed in the project where the flow was imported. Property values that do not appear in the imported output object are taken from your execution settings. See Runtime Dataflow Execution Settings.

Before You Begin

Dry-run import

Tip

You can gather some of the following information by performing a dry run of importing the flow. In the Connections Mapping dialog, you can review the objects that need to be mapped and try to identify items that need to be fixed. Make sure you cancel out of the import instead of confirming it.

Import

Note

If the exported ZIP file contains a single JSON file, you can just import the JSON file. If the export ZIP also contains other artifact files, you must import the whole flow definition as a ZIP file. For best results, import the entire ZIP file.
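If you want to check what an export contains before importing, you can inspect the archive locally. The sketch below uses only the Python standard library. The function name and the returned labels are illustrative assumptions. It reports whether the archive holds a single JSON file, in which case the JSON alone can be imported, or additional artifact files, in which case the whole ZIP is required.

```python
import io
import zipfile


def classify_export(zip_source):
    """Return 'json' if the archive holds exactly one JSON file and nothing
    else; otherwise return 'zip', meaning the whole archive must be imported.

    zip_source may be a file path or a file-like object.
    """
    with zipfile.ZipFile(zip_source) as archive:
        # Skip directory entries; only real files matter here.
        names = [n for n in archive.namelist() if not n.endswith("/")]
    if len(names) == 1 and names[0].lower().endswith(".json"):
        return "json"
    return "zip"


def make_sample_zip(files):
    """Build an in-memory ZIP for demonstration (file names are made up)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    buffer.seek(0)
    return buffer
```

Even when the result is `'json'`, importing the entire ZIP file remains the safer choice, as noted above.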

Import flow ZIP file

Steps:

Export the flow from the source system. See Export Flow.

Log in to the import system, if needed.

Click Flows.

From the context menu in the Flow page, select Import Flow.

Tip

You can import multiple flows (ZIP files) through the file browser or through drag-and-drop. Press CTRL/COMMAND + click or SHIFT + click to select multiple files for import.

Select the ZIP file containing the exported flow. Click Open.

Flow mappings

When you import a flow into a new project or workspace, you may need to remap the connections and environment parameters referenced in the imported flow to corresponding objects in that project or workspace.

Note

This feature may need to be enabled in your project by an administrator. For more information, see Dataprep Project Settings Page.

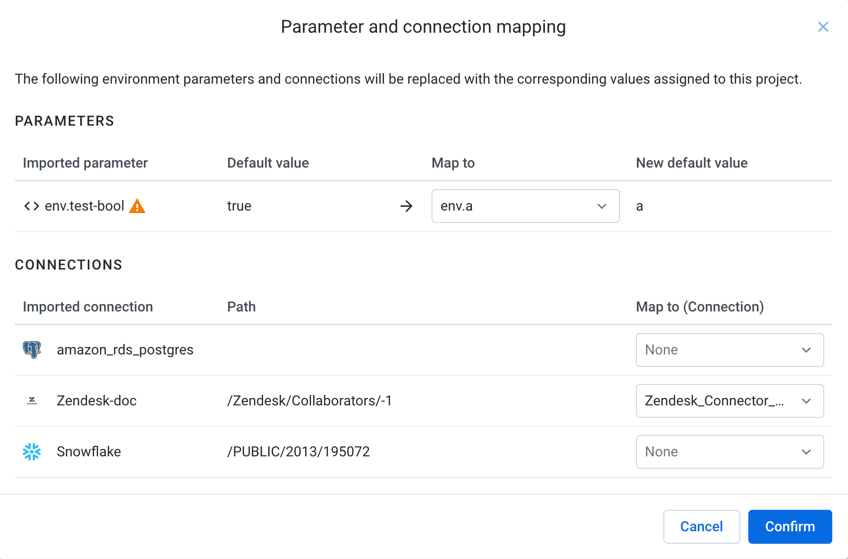

Figure: Parameter and connection mapping dialog

Tip

For future reference, it may be helpful to take a screenshot of this screen, if you cannot complete all mappings through the dialog.

Review environment parameter mappings

If the exported flow contains references to environment parameters, which can appear in paths to imported datasets or output objects, then these references must be mapped to environment parameters in the new project or workspace. In the Parameters pane, you can review the set of environment parameters referenced in the exported flow. A Warning icon next to an environment parameter indicates that the parameter does not exist as named in the import environment.

Note

Environment parameters can be created by an administrator only.

For each environment parameter, you can do one of the following:

Select the environment parameter to which to map it from the drop-down on the right side, OR

Write down the name and default value of the environment parameter for creation in the import environment.

For more information on creating environment parameters, see Environment Parameters Page.

For more information on parameters in general, see Overview of Parameterization.
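When a flow references many environment parameters, it can help to work out ahead of time which ones already exist by name in the import environment and which ones an administrator must create. The following is a minimal sketch of that bookkeeping. The function, the data shapes, and the parameter names are illustrative assumptions and do not represent a product API.

```python
def plan_parameter_mappings(referenced, available):
    """Split the parameters referenced by an exported flow into two groups.

    referenced: dict of parameter name -> default value from the source flow
    available:  set of parameter names that exist in the import environment

    Returns (mapped, to_create):
      mapped    - names that exist as named and can be mapped directly
      to_create - name -> default value to hand to an administrator
    """
    mapped = {}
    to_create = {}
    for name, default in referenced.items():
        if name in available:
            mapped[name] = name
        else:
            # Corresponds to the Warning icon: no parameter with this name.
            to_create[name] = default
    return mapped, to_create


# Hypothetical parameter names and values, for illustration only.
mapped, to_create = plan_parameter_mappings(
    {"env.sourceBucket": "gs://example-source", "env.outputDir": "/out"},
    {"env.sourceBucket"},
)
```

In this example, `to_create` holds the name and default value of the one parameter that must be written down and created in the import environment.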

Review connection mappings

Any connection referenced in the exported flow or reference datasets must be mapped to a connection in the import workspace or project.

Note

The exported flow may contain references to upstream flows and sources. These references may need to be mapped during the import process.

Note

After import, you may be required to resolve connectivity issues, if the remapped connections do not provide the required access to sources and outputs.

For each connection:

Select the connection to which to map it from the drop-down on the right side, OR

Write down the name of the source connection. In the source flow, you must acquire the connection information and use it to create a replacement connection in the new project or workspace.

Connection type is supported in product edition of target workspace or project.

Access to same database or datastore. Access must be read or read/write depending on flow.

Credentials in use are same or have same access levels.

For more information, see Connections Page.
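The criteria above for a replacement connection can be treated as a checklist. The sketch below models them under simplifying assumptions: the `Connection` class, its fields, and the connection type labels are hypothetical, and credential equivalence is reduced to a single read-only versus read/write flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    name: str
    conn_type: str   # type label; the values used below are made up
    datastore: str   # database or datastore that the connection reaches
    can_write: bool  # False means read-only access


def replacement_problems(source, candidate, supported_types, flow_writes):
    """List the reasons a candidate cannot replace the source connection.

    supported_types: connection types available in the target edition
    flow_writes:     True if the flow publishes through this connection
    An empty list means the candidate passes this simplified checklist.
    """
    problems = []
    if candidate.conn_type not in supported_types:
        problems.append("connection type not supported in target edition")
    if candidate.datastore != source.datastore:
        problems.append("different database or datastore")
    if flow_writes and not candidate.can_write:
        problems.append("flow needs read/write access")
    return problems
```

A candidate that returns an empty list may still fail at job execution if its credentials grant narrower access than those used in the source environment, so review the imported flow after mapping.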

Review SQL script connection mappings

If your flow contains SQL scripts that are executed before or after job execution, you can remap the connections from the old environment into the new one.

Finishing up

To complete the import process, click Confirm. The flow is imported and available for use in the Flows page.

Post-import

If you were unable to complete mappings through the dialog:

You may need to reconnect your imported datasets to data sources that are available in the new workspace or project. See Reconnect Flow to Source Data.

You may also need to reconnect your outputs. See Reconnect Flow to Outputs.

An administrator may need to create environment parameters for you. See Environment Parameters Page.

Samples are not included in an exported flow. Samples must be recreated in the new project or workspace. See Samples Panel.

Note

If you have imported a flow from an earlier version of the application, you may receive warnings of schema drift during job execution when there have been no changes to the underlying schema. This is a known issue. The workaround is to create a new version of the underlying imported dataset and use it in the imported flow.